Have You Ever Seen A Ballot For The U.S. News IP Specialty Ranking? Here It Is…

The U.S. News rankings of law schools cast a long shadow on the legal education industry. Although the “general” rankings get most of the attention, U.S. News also publishes rankings of various specializations–including a ranking of “intellectual property” programs. Anecdotally, over the years a number of Santa Clara Law high tech students have told me that the specialty rankings influenced their decision to come to SCU.

Unfortunately, the specialty rankings are even less credible than the general rankings. The general rankings are generated using a complex and ever-changing multi-factor formula, which creates a veneer of scientific precision even though the data inputs are often garbage and the assumptions underlying the various factors are mostly stupid. Yet, as bad as that is, it’s a model of rigor compared to the specialty rankings.

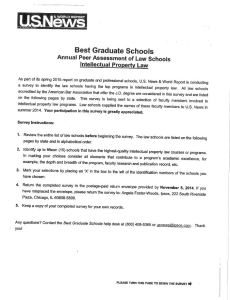

Let’s take a closer look at the “formula” used for the IP specialty rankings. IP specialty ranking voters are asked to do the following:

Identify up to fifteen (15) schools that have the highest-quality intellectual property courses or programs. In making your choices consider all elements that contribute to a program’s academic excellence, for example, the depth and breadth of the program, faculty research and publication record, etc.

See the full ballot.

This instruction is followed by 3 pages listing approximately 200 law schools, organized by state and then alphabetically. I also found Step 5 intriguing: “Keep a copy of your completed survey for your own records”…because…? Can a voter demand a recount later?

There are numerous problems with this survey. Let me highlight two:

* There is a semantic ambiguity about what constitutes an “IP” program and how it relates to “technology” law. Several of the top ranked programs in the IP specialty ranking don’t refer to IP in their program name, such as Stanford’s “Program in Law, Science & Technology,” Berkeley’s “Center for Law & Technology” and Santa Clara’s “High Tech Law Institute.” I believe most voters interpret “IP” to include technology law, even though the ballot’s instructions don’t suggest that.

* How should voters define “quality”? The instructions confusingly say voters can consider “courses” or “programs,” even though those considerations might point in different directions. For example, a school might have excellent faculty members but an average curriculum; or a school could have an awesome roster of courses all staffed by adjuncts. The additional “clarifying” language says voters can consider the “depth and breadth of the program” and faculty research/publications, but those are just more factors that also could point in different directions. So what criteria are voters using, exactly?

Of course, I recognize a tendentious parsing of the ballot’s instructions is meaningless. First, I assume every voter relies on his or her own intuitive sense of what makes for a “good” program, without any consideration of the ballot instructions. Second, and more structurally, voters simply cannot have well-informed views about all 200ish schools. This is a common critique of the general U.S. News ballots, but the critique applies with equal force to the specialty programs.

Because most voters won’t have well-informed views about most programs, the ballot probably devolves into a survey of name recognition and very general impressions–in other words, a popularity contest. I’m amazed when anyone pays close attention to small movements in such an imprecise measure, but perhaps seeing the ballot in all its glory might help discourage such overreliance in the future.

One final point: a few years ago, I was told that U.S. News sent out about 120 ballots for the IP specialty ranking (selected from the ~700 members of the AALS IP Section, even though many section members are only peripherally interested in IP) and had about a 50% return rate. If that’s true, the ranking is based on a total of about 60 votes–in other words, a small dataset that is probably inadequate to produce statistical reliability, and where just a few votes here or there probably can move a school several places up or down in the rankings.
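
To put rough numbers on that last point, here is a back-of-the-envelope sketch in Python. It takes the secondhand figures above (about 120 ballots, about a 50% return rate) at face value, and it makes a simplifying assumption that appears nowhere in the ballot: that voters behave like a simple random sample, so the usual binomial margin of error applies. The function names and the 30% example share are hypothetical, chosen only for illustration.

```python
import math


def expected_returns(ballots_sent: int, return_rate: float) -> int:
    """Number of ballots expected back, rounded to a whole ballot."""
    return round(ballots_sent * return_rate)


def margin_of_error(share: float, n_votes: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for the share of voters naming a school.

    Assumes a simple random sample (an illustrative assumption, not something
    the survey claims) and uses the normal approximation to the binomial.
    """
    return z * math.sqrt(share * (1.0 - share) / n_votes)


# Figures as reported secondhand in the post; treat them as approximate.
n = expected_returns(ballots_sent=120, return_rate=0.5)

# Hypothetical school named on 30% of returned ballots.
moe = margin_of_error(share=0.30, n_votes=n)

# Each single ballot is worth this fraction of the total vote.
one_vote = 1 / n

print(f"ballots returned: {n}")
print(f"margin of error at a 30% share: +/- {moe:.1%}")
print(f"weight of one ballot: {one_vote:.1%}")
```

Under these assumptions, a school named by 30% of voters carries a margin of error of roughly 12 percentage points, and each individual ballot shifts a school's share by a bit under 2 points. Schools whose shares are only a few points apart are, on this rough view, not meaningfully distinguishable.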